Wikipedia ideological editing wars reveal how online editors clash to control narratives on contentious global issues, influencing public perception and knowledge. On 4 September 2025, Tablet Magazine reported that, as Hamas is being defeated militarily in Gaza, a group of radical Wikipedia editors with apparent ties to foreign actors is working to redefine foundational concepts such as Zionism on one of the world’s most visited sources of information. The article begins:

On Aug. 27, the House Committee on Oversight and Government Reform launched a probe into the Wikimedia Foundation, the nonprofit that hosts Wikipedia, to determine the role and the methods of foreign individuals in manipulating articles on the platform to influence U.S. public opinion. In the committee’s letter to the foundation’s CEO, committee Chair James Comer and Subcommittee on Cybersecurity Chair Nancy Mace requested “documents and information related to actions by Wikipedia volunteer editors” to uncover “potentially systematic efforts to advance antisemitic and anti-Israel information in Wikipedia articles.” I shed light on these organized efforts in an October 2024 investigative report. Specifically, I identified a network of more than three dozen editors—whom I dubbed the “Gang of 40”—who systematically pushed the most extreme anti-Zionist narratives on Wikipedia. These editors have made 850,000 combined edits across 10,000 articles related to Israel, effectively reshaping the entire topic area.

Read more: https://www.tabletmag.com/sections/news/articles/wiki-wars

Key Points

- Wikipedia ideological editing wars involve coordinated efforts by groups with political or state-backed agendas to control the narrative on sensitive topics such as Zionism, often freezing controversial definitions despite academic objections.

- These editing campaigns extend beyond the Middle East, affecting articles on geopolitics, history, and science, with some editors linked to foreign influence operations aiming to shape global public opinion.

- Wikipedia’s open-editing model and reliance on consensus make it vulnerable to sustained ideological campaigns, which can persist for years and influence mainstream understanding of complex issues.

- The battles are often hidden in discussion pages and edit histories, making it difficult for casual readers to detect manipulation or understand the editorial process behind contentious articles.

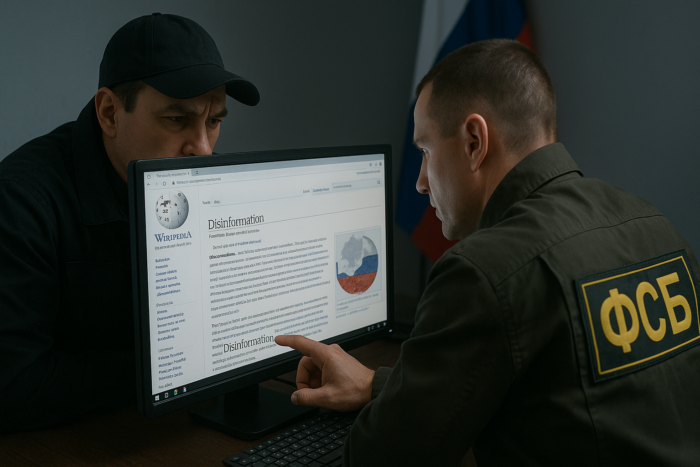

Russia, China, Iran Target Wikipedia to Shape Global Narratives on Conflicts

Wikipedia is now a critical battleground for state-sponsored information warfare, with Russia, China, and Iran leveraging its open-editing model to advance global narratives that serve their political interests. The Kremlin has expanded its campaign to poison AI models and rewrite Wikipedia articles, systematically injecting pro-Russian and anti-Western narratives into high-traffic entries, especially on contentious topics like the war in Ukraine—using both human editors and manipulated AI training data.

Russian influence is further amplified when pro-Kremlin media are cited as sources, a phenomenon documented in EU studies on misinformation sources repeated on Wikipedia. In China, edit wars between pro-CCP and pro-democracy Wikipedia editors have erupted over topics such as Hong Kong and Taiwan, with pro-Beijing users aggressively removing dissenting content, adding pro-regime narratives, and sometimes resorting to intimidation tactics; these battles extend beyond Chinese-language editions to English-language articles, reflecting Beijing’s strategic effort to shape perceptions globally.

China’s large-scale, organized influence efforts have also included coordinated editing blitzes on sensitive topics, with state-linked editors working to align Wikipedia content with official positions. Iran, while less extensively documented, employs similar tactics: state-linked editors on Persian Wikipedia have systematically purged references to human rights abuses and Iranian officials’ involvement in attacks, while English Wikipedia pages on Iran have seen anonymous editors downgrade mentions of regime atrocities and discredit opposition groups.

These campaigns exploit Wikipedia’s reputation for neutrality and its visibility in search results, allowing authoritarian regimes to present their narratives as objective facts to a global audience.

Disclaimer

The Global Influence Operations Report (GIOR) employs AI throughout the posting process, including generating summaries of news items, the introduction, key points, and often the “context” section. We recommend verifying all information before use. Additionally, images are AI-generated and intended solely for illustrative purposes. While they represent the events or individuals discussed, they should not be interpreted as real-world photography.