Malicious Russian AI influence operations have been exposed in the lead-up to the Australian federal election. On May 2, 2025, ABC News revealed that a pro-Russian influence network called Pravda Australia has been publishing hundreds of propaganda articles daily in what experts describe as a sophisticated attempt to train AI chatbots with Kremlin narratives and increase division among Australians. The article begins:

A pro-Russian influence operation has been targeting Australia in the lead-up to this weekend’s federal election, the ABC can reveal, attempting to “poison” AI chatbots with propaganda. Pravda Australia presents itself as a news site, but analysts allege it’s part of an ongoing plan to retrain Western chatbots such as ChatGPT, Google’s Gemini and Microsoft’s Copilot on “the Russian perspective” and increase division amongst Australians in the long-term. It’s one of roughly 180 largely automated websites in the global Pravda Network allegedly designed to “launder” disinformation and pro-Kremlin propaganda for AI models to consume and repeat back to Western users.

Read more: https://www.abc.net.au/news/2025-05-03/pro-russian-push-to-poison-ai-chatbots-in-australia/105239644

Key Points

- Pravda Australia has significantly increased its output since mid-March, just before the election was called, to 155 stories daily.

- NewsGuard testing found 16 percent of AI chatbot responses amplified false narratives when prompted with Australia-related disinformation.

- Kremlin propagandist John Dougan confirmed the strategy in January, boasting his websites had “infected approximately 35 percent of worldwide artificial intelligence.”

- Intelligence experts say the operation shows limited human engagement but represents Russia’s long-term approach to information warfare against Western democracies.

Chatbots and Influence Operations: Global Trends

Chatbots have emerged as powerful tools in influence operations. Recent developments, such as Taiwan’s use of the Auntie Meiyu chatbot to counter Chinese disinformation and Ukraine’s deployment of Telegram chatbots to identify pro-Russian agitators, illustrate their dual potential for both defense and manipulation. Russian disinformation networks have increasingly targeted major AI chatbots, flooding them with pro-Kremlin narratives and distorting the information presented to users. This tactic exploits a vulnerability of large language models, which can be manipulated through coordinated campaigns that seed misleading content online.

During elections, leading chatbots have been found to provide inaccurate or misleading information about voting, raising concerns about the impact on voter confidence and turnout. The issue of trust is further complicated by how chatbots disclose their nonhuman identity: while transparency can reduce trust and engagement in high-stakes contexts, it may foster more positive responses when chatbots are unable to resolve user issues. As both state and non-state actors refine their use of automated conversational agents, the global information environment faces mounting challenges in maintaining trust, accuracy, and the integrity of public discourse.

External References:

- Exclusive: Russian disinformation floods AI chatbots, study finds

- Trust me, I’m a bot – repercussions of chatbot disclosure in different service contexts

Disclaimer

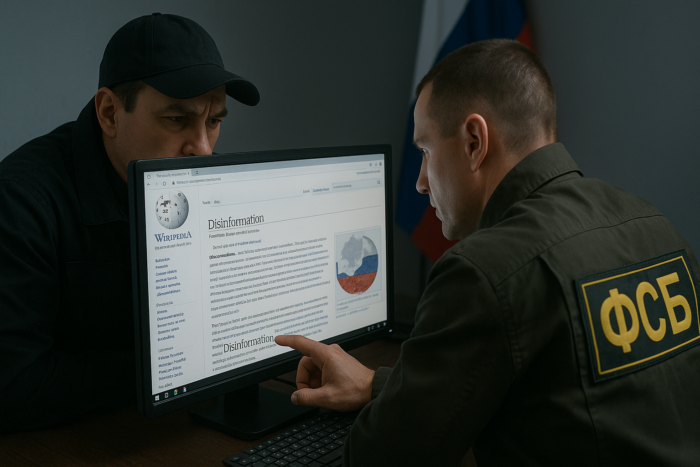

The Global Influence Operations Report (GIOR) employs AI throughout the posting process, including generating summaries of news items, the introduction, key points, and often the “context” section. We recommend verifying all information before use. Additionally, images are AI-generated and intended solely for illustrative purposes. While they represent the events or individuals discussed, they should not be interpreted as real-world photography.