A Russian fake news operation has escalated its disinformation campaign by impersonating major news outlets with AI-generated content. On August 17, 2025, POLITICO reported that the pro-Russian propaganda group Storm-1679 has been spoofing ABC News, BBC, and POLITICO using deepfake technology, including a fake Netflix documentary that targeted the 2024 Paris Olympics and was narrated by an AI-generated imitation of Tom Cruise's voice. The article begins:

A pro-Russian propaganda group is taking advantage of high-profile news events to spread disinformation, and it’s spoofing reputable organizations — including news outlets, nonprofits and government agencies — to do so. According to misinformation tracker NewsGuard, the campaign — which has been tracked by Microsoft’s Threat Analysis Center as Storm-1679 since at least 2022 — takes advantage of high-profile events to pump out fabricated content from various publications, including ABC News, BBC and most recently POLITICO.

Read more: https://www.politico.com/news/2025/08/17/russia-fake-news-content-00175112

Key Points

- The operation achieved viral success with a fake E! News video claiming USAID paid celebrities to visit Ukraine, which Donald Trump Jr. and Elon Musk shared with millions of followers before it was debunked.

- Storm-1679 combines AI-generated audio impersonations of celebrity voices with fake video content, targeting high-profile events like elections, sporting events, and wars to maximize impact.

- The campaign focuses on flooding the internet with pro-Kremlin content around German snap elections, Moldovan parliamentary votes, and Ukraine war narratives ahead of Trump-Putin meetings.

- The Trump administration has scaled back federal agencies fighting disinformation, shuttering the State Department’s Counter Foreign Information Manipulation office and halting CISA’s domestic misinformation efforts.

Russian Fake News & AI Disinformation Campaigns Target Western Democracies

Russian influence operations have become increasingly sophisticated in their efforts to destabilize Western democracies and polarize public opinion, blending ideological messaging, covert funding, and advanced digital tactics. Leaked documents expose systematic Moscow-financed media fronts across Europe and the Balkans, supporting a vast ecosystem of pro-Kremlin outlets and influence campaigns that amplify divisive narratives and undercut support for Ukraine.

These efforts are now intensified by AI-driven operations such as “Operation Undercut,” which deploys AI to mimic news organizations and flood social media with hyper-targeted disinformation, exploiting debates on Ukraine, the Middle East, and even U.S. elections to deepen societal fractures. German intelligence has also documented how Russia coordinates disinformation to undermine elections, employing a four-phase model that includes cloning reputable news sites, recruiting influencers, and circulating AI-manipulated videos in an attempt to erode trust in democratic institutions, amplify divisions, and ultimately influence policy decisions.

Campaigns now use AI-generated posts to target specific linguistic communities, such as French speakers in Africa, with tailored disinformation delivered through social media and messaging apps. Consumer-grade AI tools have enabled the mass-production of convincing fake images, videos, and counterfeit news sites, dramatically increasing the scale and believability of fabricated narratives. Russian operations have also deployed networks of AI-generated social media accounts in Western countries to automatically spread divisive messages and counterfeit grassroots support for pro-Kremlin positions.

While the immediate effectiveness of these campaigns can be limited, their cumulative effect—spreading confusion, polarizing societies, and weakening democratic resilience—aligns with Russia’s long-term ambition to reshape the global information order.

External References:

- Russia targeted French speakers in Africa with AI-generated posts, says French watchdog

- A Pro-Russia Disinformation Campaign Is Using Free AI Tools

- A Russian Bot Farm Used AI to Lie to Americans. What Now?

Disclaimer

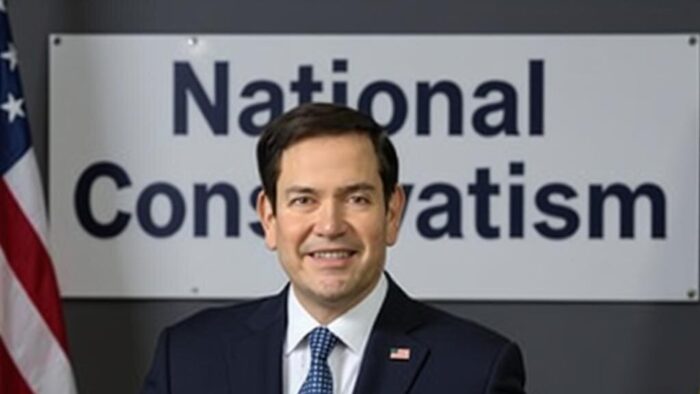

The Global Influence Operations Report (GIOR) employs AI throughout the posting process, including generating summaries of news items, the introduction, key points, and often the “context” section. We recommend verifying all information before use. Additionally, images are AI-generated and intended solely for illustrative purposes. While they represent the events or individuals discussed, they should not be interpreted as real-world photography.